The Autonomous Exploration for Gathering Increased Science System, or AEGIS, is being developed by the Artificial Intelligence and Machine Learning Systems Groups at NASA’s Jet Propulsion Laboratory to provide automated targeting for remote sensing instruments on the Mars Exploration Rover (MER) mission.

Currently, the MER Opportunity rover is trying to reach its next scientific target, a giant crater named Endeavour. The distance to Endeavour, about 12 kilometers (7 miles), is almost as great as the total distance Opportunity has traveled since landing on the Red Planet in 2004. Reaching it in a reasonable time frame will require many long drives.

The rovers have limited downlink capability: they capture more images than can be sent back to Earth or stored onboard. In addition, advances in rover navigation have caused traverse distances to grow much faster than communications bandwidth, so the quantity of data returned to Earth for each meter traversed keeps shrinking. As a result, scientists may never see or examine much of the terrain the rover observes on a long traverse. To address this issue, a new technology named AEGIS was developed to autonomously recognize and characterize high-value science targets during and after drives without requiring large amounts of data to be transmitted to Earth.

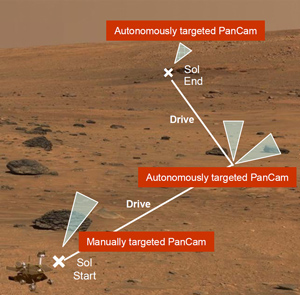

Targets for a rover’s remote-sensing instruments, especially those with a narrow field of view, are typically selected by human operators based on imagery already received on Earth. AEGIS enables the rover’s flight software to analyze imagery onboard in order to autonomously select and plan simple, targeted remote-sensing observations in an opportunistic fashion.

Tara Estlin is a senior member of the Artificial Intelligence Group, where she leads the development of AEGIS. She explains that, in the best case, manually selecting a target and building an imaging sequence introduces a delay of one communications cycle, which is about a day. In other cases, the operations team might not receive images until days after the rover has passed a target of interest.

"This has happened with meteorites spotted in panoramic camera, or Pancam, images, where we’ve been well past them before they were identified and we’ve had to turn around and go back because the team felt they were important to investigate." The worst case, she says, is that they never get the images down. If the rover is on a long drive, the team might deem it necessary to delete some of the images taken for engineering purposes in order to make way for higher value data.

The Path to Autonomy

AEGIS operates by analyzing MER navigation camera, or Navcam, images to identify terrain features of interest, such as large, dark-colored rocks. Scientists specify the types of terrain characteristics to target, and those specifications are uplinked to the rovers on Mars.
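To make the idea of picking out large, dark rocks in an image more concrete, here is a minimal sketch of one simple approach: threshold a grayscale image, group neighboring dark pixels into regions, and keep only regions above a minimum size. This is an illustrative stand-in, not the detector AEGIS actually uses. The image is assumed to be a small list of 0-255 brightness values, and `find_dark_regions`, `Candidate`, `dark_threshold`, and `min_pixels` are hypothetical names chosen for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Candidate:
    """A connected region of dark pixels treated as a possible rock (hypothetical)."""
    area: int                      # number of pixels in the region
    mean_brightness: float         # average pixel value, 0 (black) to 255 (white)
    centroid: tuple[float, float]  # (row, col) in image coordinates


def find_dark_regions(image, dark_threshold=60, min_pixels=4):
    """Group 4-connected pixels darker than dark_threshold into candidate regions."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    candidates = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] >= dark_threshold:
                continue
            # Breadth-first flood fill over dark, not-yet-visited neighbors.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] < dark_threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Ignore specks too small to count as rocks.
            if len(pixels) >= min_pixels:
                n = len(pixels)
                candidates.append(Candidate(
                    area=n,
                    mean_brightness=sum(image[y][x] for y, x in pixels) / n,
                    centroid=(sum(y for y, _ in pixels) / n,
                              sum(x for _, x in pixels) / n),
                ))
    return candidates


# Toy 6x6 image: bright soil (200), one 2x2 dark rock (40), one dark speck (30).
image = [
    [200, 200, 200, 200, 200, 200],
    [200,  40,  40, 200, 200, 200],
    [200,  40,  40, 200, 200, 200],
    [200, 200, 200, 200,  30, 200],
    [200, 200, 200, 200, 200, 200],
    [200, 200, 200, 200, 200, 200],
]
for cand in find_dark_regions(image):
    print(cand)  # Candidate(area=4, mean_brightness=40.0, centroid=(1.5, 1.5))
```

The single-pixel speck is discarded by `min_pixels`, which is the kind of knob a science team might adjust for a given environment.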

Once it identifies a target in a Navcam image, AEGIS determines the target’s location and uses MER's Visual Target Tracking (VTT) technology to point a remote sensing instrument at that spot to take an additional, higher resolution measurement. The sequence can be run during and after traverses when the rover has driven into a new area for which no images have yet been downlinked to Earth. The team can direct the rover to run the AEGIS sequence at the end of a drive and to point its Navcam, for example, in the direction of the next drive. This capability is especially useful for multi-day plans where a drive is performed on the first day and only untargeted remote sensing can be performed on the second and third days, since another communication cycle with Earth has not yet occurred.
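Turning a target's location into an instrument pointing can be illustrated with basic geometry. The sketch below assumes the target's 3D position has already been estimated and computes the azimuth and elevation a mast-mounted camera would need to face it. The coordinate frame (x forward, y left, z up), the `pointing_angles` function, and the example mast height are hypothetical; this is not MER's camera model or the Visual Target Tracking software.

```python
import math


def pointing_angles(target, mast_head):
    """Azimuth and elevation, in degrees, from a mast head to a target point.

    Both points are (x, y, z) in meters in a hypothetical rover frame with
    x pointing forward, y to the left, and z up.
    """
    dx = target[0] - mast_head[0]
    dy = target[1] - mast_head[1]
    dz = target[2] - mast_head[2]
    # Azimuth: rotation about the vertical axis; positive means to the left.
    azimuth = math.degrees(math.atan2(dy, dx))
    # Elevation: angle above (positive) or below (negative) the horizontal.
    elevation = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return azimuth, elevation


# Hypothetical mast head 1.5 m above the ground; rock 3 m ahead and 3 m left.
az, el = pointing_angles((3.0, 3.0, 0.0), (0.0, 0.0, 1.5))
print(round(az, 1), round(el, 1))  # 45.0 -19.5
```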

Estlin, who also works part-time driving the rovers, is careful to note that the autonomous capability does not replace the scientist’s trained eye. "Scientists are still the best at analyzing images and picking out science targets. They tell our software what to look for, like what makes an interesting rock in a given environment." The idea, she explains, is to give researchers new measurements at times when it is difficult to do so because the rover is in transit and has limited downlink and data storage capability. "I like to call it bonus science," she says.

Every time AEGIS runs onboard the rover, the team can pick a new set of parameters, such as rock albedo or size, to determine the rover’s focus. The scientists can decide what they think will be the most interesting set of characteristics in a given environment, and the rover will choose the best candidates fitting those parameters.
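The idea of scientists choosing parameters such as albedo or size, and the rover choosing the best matching candidates, can be sketched as a weighted ranking. This is an assumption-laden illustration, not AEGIS's actual scoring: the `Rock` and `Criteria` types, the min-max normalization, and the linear weighted score are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Rock:
    name: str
    albedo: float  # reflectance, 0 (dark) to 1 (bright)
    size_m: float  # apparent diameter in meters


@dataclass
class Criteria:
    """Hypothetical per-run parameters uplinked by the science team."""
    albedo_weight: float     # positive favors bright rocks, negative favors dark
    size_weight: float       # positive favors large rocks
    min_size_m: float = 0.0  # discard rocks smaller than this


def normalize(values):
    """Rescale values to [0, 1] so features with different units are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_targets(rocks, criteria, top_n=1):
    """Return the top_n eligible rocks, best score first."""
    eligible = [r for r in rocks if r.size_m >= criteria.min_size_m]
    if not eligible:
        return []
    albedos = normalize([r.albedo for r in eligible])
    sizes = normalize([r.size_m for r in eligible])
    scored = [
        (criteria.albedo_weight * a + criteria.size_weight * s, r)
        for a, s, r in zip(albedos, sizes, eligible)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_n]]


rocks = [Rock("A", 0.30, 0.20), Rock("B", 0.10, 0.25), Rock("C", 0.12, 0.05)]
dark_and_large = Criteria(albedo_weight=-1.0, size_weight=1.0, min_size_m=0.1)
print(rank_targets(rocks, dark_and_large)[0].name)  # B
```

Changing only the `Criteria` values between runs changes which rock wins, which mirrors how a new parameter set can shift the rover's focus without changing the onboard code.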

The AEGIS capability was developed as part of a larger autonomous science framework called OASIS (short for Onboard Autonomous Science Investigation System), which is designed to allow a rover to identify and react to serendipitous science opportunities. The AEGIS system takes advantage of the OASIS ability to detect and characterize interesting terrain features in rover images.

The Rollout

While the technology behind AEGIS and OASIS could be used to direct the activities of any remote sensing instrument, the strongest case is made for instruments with a more limited field of view. Therefore, the demonstration with Opportunity will use narrower-angle subframes of Pancam images, rather than full images.

"These are 13-spectral filter images, which give us a lot of information. And since AEGIS commands the Pancam to point directly at the target of interest, we can take a narrow-angle image instead of a full panoramic view. That makes these observations cheaper to downlink, in terms of bandwidth," says Estlin.
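A rough calculation shows why a narrow-angle subframe is cheaper to downlink. The sketch below compares uncompressed data volume for a full frame and a subframe across 13 filters. The 1024 x 1024 full-frame size, 12 bits per pixel, and 256 x 256 subframe are assumptions for illustration, and real downlinks are typically compressed, so actual volumes would differ.

```python
def image_bits(width, height, bits_per_pixel, filters):
    """Uncompressed data volume, in bits, for one image per spectral filter."""
    return width * height * bits_per_pixel * filters


FULL = (1024, 1024)    # assumed full-frame size, in pixels
SUBFRAME = (256, 256)  # hypothetical narrow-angle subframe around a target
BITS_PER_PIXEL = 12    # assumed bit depth before any compression
FILTERS = 13           # number of spectral filters, per the text

full = image_bits(*FULL, BITS_PER_PIXEL, FILTERS)
sub = image_bits(*SUBFRAME, BITS_PER_PIXEL, FILTERS)
# MB here means 10**6 bytes.
print(f"full frame: {full / 8 / 1e6:.1f} MB, "
      f"subframe: {sub / 8 / 1e6:.2f} MB, ratio {full // sub}x")
# full frame: 20.4 MB, subframe: 1.28 MB, ratio 16x
```

Because bit depth and filter count cancel out, the savings ratio depends only on the ratio of image areas: a subframe one quarter as wide and one quarter as tall needs one sixteenth of the data.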

Drives with the Spirit rover tend to cover short distances, so AEGIS will be deployed first on Opportunity, which is the rover best able to make long drives at this time. Estlin says Opportunity is well positioned to use this new capability, and she expects to have the AEGIS sequence running on the rover by the end of 2009.

The ChemCam spectrometer on NASA’s 2011 Mars Science Laboratory, or MSL, rover also has a narrow field of view, and the AEGIS team anticipates that their system will be useful to that mission as well. Estlin says the capability could be added through a flight software update after the rover is operating on Mars.

This work has been done with assistance from NASA's Mars Exploration Rover Project and with funding from the New Millennium Program, the Mars Technology Program, the JPL Interplanetary Network Development Program, and the Intelligent Systems Program.

References

- T. Estlin, R. Castano, R. C. Anderson, D. Gaines, B. Bornstein, C. de Granville, D. Thompson, M. Burl and M. Judd, "Automated Targeting for the MER Rovers," Proceedings of the Space Mission Challenges for Information Technology Conference (SMC-IT 2009), Pasadena, CA, July 2009.

- T. Estlin, R. Castano, D. Gaines, B. Bornstein, M. Judd, and R. C. Anderson, "Enabling Autonomous Science for a Mars Rover," Proceedings of the SpaceOps 2008 Conference, Heidelberg, Germany, May 2008.

- R. Castano, T. Estlin, R. C. Anderson, D. Gaines, A. Castano, B. Bornstein, C. Chouinard, and M. Judd, "OASIS: Onboard Autonomous Science Investigation System for Opportunistic Rover Science," Journal of Field Robotics, Vol. 24, No. 5, May 2007.